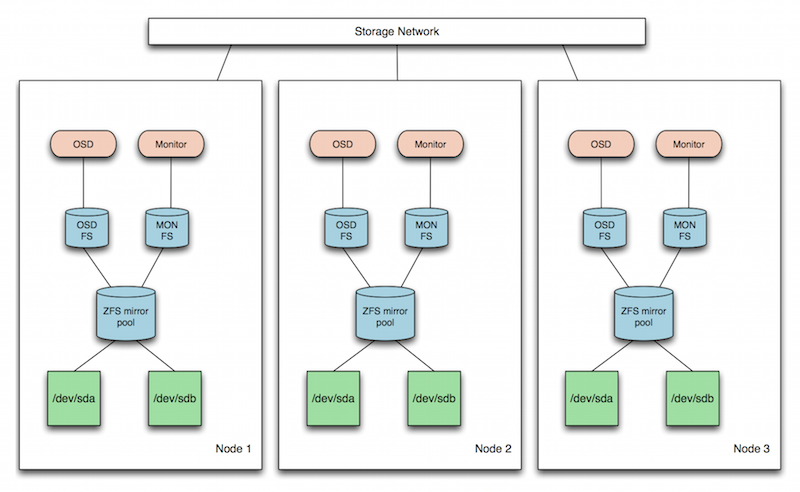

Ceph is a distributed storage system which aims to provide performance, reliability and scalability. ZFS is an advanced filesystem and logical volume manager.

ZFS can take care of data redundancy, compression and caching on each storage host. It presents the storage hardware to Ceph's OSD and Monitor daemons.

Ceph can take care of data distribution and redundancy between all storage hosts.

If you want to use ZFS instead of the other filesystems supported by the ceph-deploy tool, you have to follow the manual deployment steps.

I use ZFS on Linux on Ubuntu 14.04 LTS and prepared the ZFS storage on each Ceph node in the following way (mirror pool for testing):

$ zpool create -o ashift=12 storage mirror /dev/disk/by-id/foo /dev/disk/by-id/bar

$ zfs set xattr=sa storage

$ zfs set atime=off storage

$ zfs set compression=lz4 storage

This pool uses 4 KiB sectors (ashift=12), stores extended attributes in inodes, doesn't update access times and uses LZ4 compression.
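The ashift value is an exponent rather than a byte count: the sector size is 2 to the power of ashift. The small sketch below shows the arithmetic. The helper name `ashift_to_bytes` is made up for illustration, and the commented commands at the end are what I would try on a host that has the `storage` pool to double-check the properties.

```shell
#!/bin/sh
# Hypothetical helper: convert a ZFS ashift value to a sector size in bytes.
# ashift is a base-2 exponent, so the size is 2^ashift.
ashift_to_bytes() {
    echo $((1 << $1))
}

ashift_to_bytes 12   # prints 4096
ashift_to_bytes 9    # prints 512

# On a host with the pool from above, the settings can be inspected with:
#   zpool get ashift storage
#   zfs get xattr,atime,compression storage
```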

On that pool I created one filesystem for OSD and Monitor each:

$ zfs create -o mountpoint=/var/lib/ceph/osd storage/ceph-osdfs

$ zfs create -o mountpoint=/var/lib/ceph/mon storage/ceph-monfs

Direct I/O is not supported by ZFS on Linux and needs to be disabled for OSD in /etc/ceph/ceph.conf, otherwise journal creation will fail.

...

[osd]

journal dio = false

...
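Because a missing or misplaced setting only shows up when journal creation fails, it can help to check the file first. The following is a minimal sketch, not part of Ceph: the function name `osd_journal_dio` is hypothetical, and it assumes a simple INI layout with one `key = value` pair per line. It also treats underscores and hyphens in the key like spaces, on the assumption that Ceph accepts those spellings interchangeably.

```shell
#!/bin/sh
# Hypothetical helper: print the value of "journal dio" found in the
# [osd] section of the given ceph.conf-style file (prints nothing if unset).
osd_journal_dio() {
    awk -F= '
        # A section header switches the "inside [osd]" flag on or off.
        /^[ \t]*\[/ { in_osd = ($0 ~ /^[ \t]*\[osd\][ \t]*$/); next }
        in_osd {
            key = $1; val = $2
            gsub(/^[ \t]+|[ \t]+$/, "", key)   # trim the key
            gsub(/^[ \t]+|[ \t]+$/, "", val)   # trim the value
            gsub(/[_-]/, " ", key)             # journal_dio -> journal dio
            if (key == "journal dio") print val
        }
    ' "$1"
}

# Usage: osd_journal_dio /etc/ceph/ceph.conf
```

If the function prints anything other than `false`, the OSD would still try to use direct I/O for its journal.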

You can enable the autostart of Monitor and OSD daemons by creating the files /var/lib/ceph/mon/ceph-foobar/upstart and /var/lib/ceph/osd/ceph-123/upstart. However, this locked up the boot process, apparently because Ceph was started before the ZFS filesystems were available.

As a workaround I added the start commands to /etc/rc.local to make sure these were run after all other services have been started:

...

start ceph-mon id=foobar

start ceph-osd id=123

...
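If the ordering problem is really about the ZFS mounts, a more defensive variant would wait for the mount points explicitly before starting the daemons. This is a sketch I have not run on the setup above. The helpers `is_mounted` and `wait_for_mount` and the `MOUNTS_FILE` variable are hypothetical names introduced here, and the mount points are the ones created earlier.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the directory appears as a mount point
# in the mount table. MOUNTS_FILE is only there to make testing possible;
# it defaults to /proc/mounts.
is_mounted() {
    awk -v dir="$1" '$2 == dir { found = 1 } END { exit !found }' \
        "${MOUNTS_FILE:-/proc/mounts}"
}

# Hypothetical helper: poll once per second until the directory is mounted,
# giving up after the given number of tries (default 30).
wait_for_mount() {
    dir=$1
    tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        is_mounted "$dir" && return 0
        tries=$((tries - 1))
        sleep 1
    done
    return 1
}

# Possible use in /etc/rc.local (needs upstart and Ceph, so left commented):
#   wait_for_mount /var/lib/ceph/mon && start ceph-mon id=foobar
#   wait_for_mount /var/lib/ceph/osd && start ceph-osd id=123
```

With this, a daemon is only started once its filesystem is actually there, and a missing mount leads to a skipped start instead of a daemon running on an empty directory.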